VOTS Benchmark

Organized by tktung

Current phase: Single Phase (started March 30, 2023, midnight UTC)

Competition ends: May 14, 2026, midnight UTC

Tracking has matured to a point where the constraints enforced in past VOT challenges can be relaxed and general object tracking should be considered in a broader context. The new Visual Object Tracking and Segmentation challenge VOTS2023 thus no longer distinguishes between single- and multi-target tracking, nor between short- and long-term tracking. We propose a single challenge that requires tracking one or more targets simultaneously by segmentation over long or short sequences, while the targets may disappear during tracking and reappear later in the video.

The VOTS2023 challenge took place between May 4th and June 18th, 2023. After the results were processed and analyzed by the VOTS2023 challenge committee, 47 trackers were accepted to the official VOTS2023 challenge leaderboard. To facilitate development, the challenge has reopened as the VOTS2023 benchmark for the research community, with the VOTS2023 challenge trackers indicated in the public leaderboard. The results analysis was presented at the VOTS2023 workshop at ICCV2023 on October 3rd, 2023: <https://www.votchallenge.net/vots2023/program.html>

The VOTS2023 Benchmark opened after the VOTS2023 challenge to allow continual evaluation and comprehensive benchmarking of modern trackers. The trackers originally submitted to the VOTS2023 challenge are denoted in the VOTS2023 Benchmark leaderboard with the word "VOTS2023" in the tracker name.

Problem statement

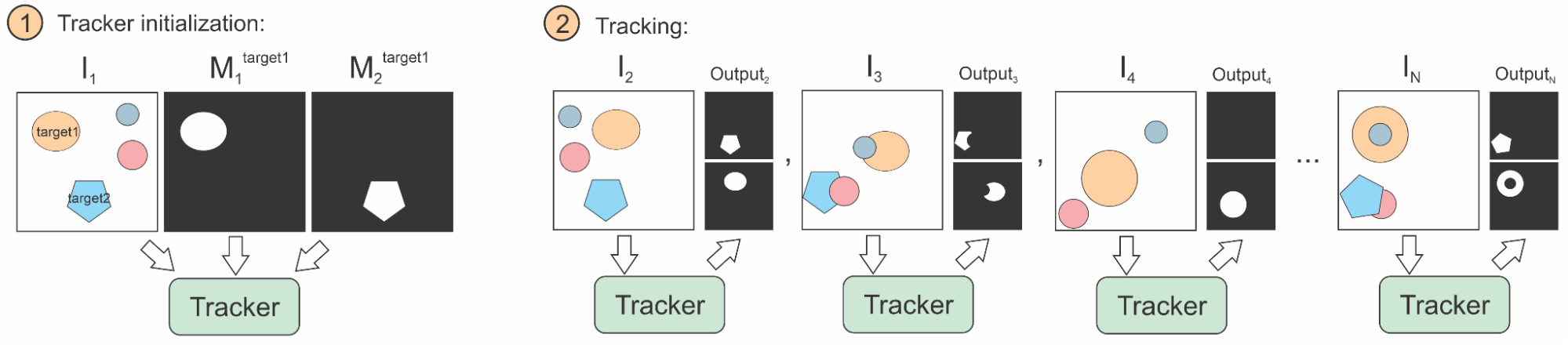

VOTS adopts a general problem formulation that covers single/multiple-target and short/long-term tracking as special cases. The tracker is initialized in the first frame by segmentation masks for all tracked targets. In each subsequent frame, the tracker has to report all segmentation masks (one for each target). The following figure summarizes the tracking task.
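The task described above can be sketched as a simple loop. This is only an illustration under stated assumptions: `StaticTracker`, `run_sequence`, the nested-list mask representation, and the use of an all-zero mask for an absent target are hypothetical choices made here, not part of the VOT toolkit.

```python
from typing import Dict, List

Mask = List[List[int]]  # binary mask as rows of 0/1 (illustrative representation)


class StaticTracker:
    """Hypothetical placeholder tracker: repeats each target's initial mask."""

    def __init__(self, init_masks: Dict[int, Mask]):
        # One initial mask per target, all given in the first frame.
        self.masks = dict(init_masks)

    def track(self, frame) -> Dict[int, Mask]:
        # A real tracker would update the masks from the frame content.
        return self.masks


def run_sequence(frames: list, init_masks: Dict[int, Mask]) -> List[Dict[int, Mask]]:
    """Initialize on the first frame, then report one mask per target afterwards."""
    tracker = StaticTracker(init_masks)
    outputs = []
    for frame in frames[1:]:
        masks = tracker.track(frame)
        # Every target is reported in every frame; in this sketch an absent
        # target would be reported as an all-zero mask (an assumption).
        assert set(masks) == set(init_masks)
        outputs.append(masks)
    return outputs
```

Single-target tracking is the special case where `init_masks` holds one entry; short-term and long-term sequences differ only in the length of `frames` and in whether targets leave the view.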

What’s new

- A single challenge unifies single/multi-target and short/long-term segmentation tracking tasks.

- A new multi-target segmentation tracking dataset.

- A new performance evaluation protocol and measures.

- All trackers will be evaluated on a sequestered ground truth.

- Participate by running your single-target or multi-target tracker (same challenge).

Participation steps

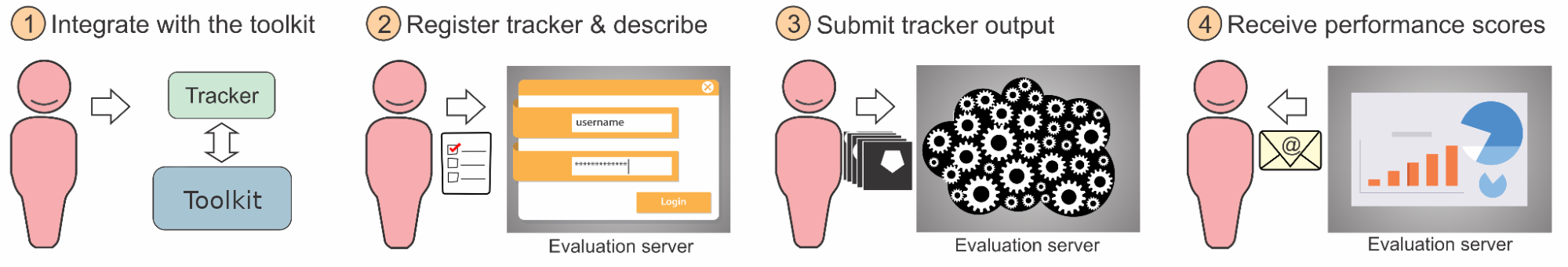

- Follow the guidelines to integrate your tracker with the new VOT toolkit and run the experiments.

- Register your tracker on the evaluation server page, fill out the tracker description questionnaire, and submit the tracker description documents: a short description for the results paper and a longer description (see explanations below).

- Once registered, submit the output produced by the toolkit (see toolkit tutorial) to the evaluation server (this page). Do not forget to pack the results with the `vot pack` command. Make sure that the tracker identifier in manifest.yml (by default inside the output zip file) matches the tracker short name you registered through our Google Form.

- Submit your zip file in the Participate tab.

- Receive performance scores.
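Before uploading, the identifier check from the steps above could be automated along these lines. This is a minimal sketch: it assumes manifest.yml stores the tracker identifier on a plain top-level `identifier: <name>` line, which should be confirmed against an actual archive produced by `vot pack`. The function names are hypothetical.

```python
import re
import zipfile
from typing import Optional


def read_manifest_identifier(zip_path: str) -> Optional[str]:
    """Return the value of an `identifier:` line in manifest.yml, if present."""
    with zipfile.ZipFile(zip_path) as archive:
        # Look for manifest.yml anywhere inside the archive.
        names = [n for n in archive.namelist() if n.split("/")[-1] == "manifest.yml"]
        if not names:
            return None
        text = archive.read(names[0]).decode("utf-8")
    # Assumption: the identifier is a plain `identifier: <name>` line.
    match = re.search(r"^identifier:[ \t]*(\S+)[ \t]*$", text, flags=re.MULTILINE)
    return match.group(1).strip("'\"") if match else None


def identifier_matches(zip_path: str, registered_short_name: str) -> bool:
    """True when the packed identifier equals the registered short name."""
    return read_manifest_identifier(zip_path) == registered_short_name
```

Calling `identifier_matches("results.zip", "MyTracker")` (both names are placeholders) returns `False` when the manifest is missing or names a different tracker, which is the mismatch the steps above warn about.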

Important note

- Evaluation of each submission takes roughly 45 minutes to 1 hour 30 minutes, and can take longer depending on server load.

- After 10 successful submissions (meaning the necessary files were submitted, the structure of your zip file is correct, etc.), you will not receive evaluation results for any further submissions.

- Successful submissions must be at least 24 hours apart, to mitigate overfitting attempts.

- Submissions that result in an error do not count toward the limit of 10 successful submissions.

- Authors are encouraged to submit their own previously published or unpublished trackers.

- Authors may submit modified versions of third-party trackers. The submission description should clearly state what the changes were. Third-party trackers submitted without significant modification will not be accepted.
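The submission limits in the notes above can be expressed as a small check. This is one reading of the rules, not the server's actual logic: `will_be_evaluated` and the `history` format are hypothetical, and the 24-hour gap is measured here from the most recent successful submission.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

MAX_SUCCESSFUL = 10            # limit on successful submissions stated in the notes
MIN_GAP = timedelta(hours=24)  # minimum spacing between successful submissions


def will_be_evaluated(history: List[Tuple[datetime, bool]], now: datetime) -> bool:
    """history holds (timestamp, succeeded) pairs for earlier submissions."""
    # Submissions that ended in an error do not count toward either rule.
    successful = sorted(t for t, ok in history if ok)
    if len(successful) >= MAX_SUCCESSFUL:
        return False
    if successful and now - successful[-1] < MIN_GAP:
        return False
    return True
```

For example, a submission made 23 hours after a successful one is rejected by this check, while one made an hour after a failed upload passes.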

FAQ

- Does the number of targets change during tracking?

All targets in the sequence are specified in the first frame. During tracking, some targets may disappear and possibly reappear later. The number of targets varies from sequence to sequence.

- Can I participate with a single-target tracker?

Sure, with a slight adjustment. You will write a wrapper that creates several independent tracker instances, each tracking one of the targets. To the toolkit, your tracker will be a multi-target tracker, while internally, you’re running independent trackers. See the example here.
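The wrapper idea can be sketched independently of the toolkit. In the code below, `MultiTargetWrapper`, `EchoTracker`, and the `track(frame)` interface are hypothetical stand-ins; the toolkit integration example referred to above should be consulted for the real API.

```python
from typing import Callable, Dict, Hashable


class MultiTargetWrapper:
    """Presents several independent single-target trackers as one multi-target tracker."""

    def __init__(self, make_tracker: Callable, first_frame, init_masks: Dict[Hashable, object]):
        # One independent instance per target, each initialized with its own mask.
        self.trackers = {
            target_id: make_tracker(first_frame, mask)
            for target_id, mask in init_masks.items()
        }

    def track(self, frame) -> Dict[Hashable, object]:
        # Each instance sees the same frame and reports the mask for its own target.
        return {target_id: t.track(frame) for target_id, t in self.trackers.items()}


class EchoTracker:
    """Hypothetical single-target tracker, used only to exercise the wrapper."""

    def __init__(self, frame, mask):
        self.mask = mask

    def track(self, frame):
        return self.mask
```

Because the instances share no state, this design cannot reason about interactions between targets, such as one target occluding another; that is the trade-off of wrapping a single-target tracker.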

- Can I participate with a bounding box tracker?

Sure, with a slight extension. In previous VOT challenges we showed that box trackers achieve very good performance on segmentation tasks by running a general segmentation method on top of a bounding box. So you can simply run AlphaRef (or a similar box refinement module like SAM) on top of your estimated bounding box to create the per-target segmentation mask. Running a vanilla bounding box tracker is possible, but its accuracy will be low (robustness might still be high).
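To see why a vanilla box tracker loses accuracy, the sketch below rasterizes a box into a rectangular mask and compares it with a thin object using plain intersection-over-union. IoU is used here only as an illustrative overlap measure, not as the official VOTS measure, and `box_to_mask` and `mask_iou` are hypothetical helpers.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary mask as rows of 0/1


def box_to_mask(box: Tuple[int, int, int, int], height: int, width: int) -> Mask:
    """Rasterize an (x, y, w, h) box into a binary mask, clipped to the image."""
    x, y, w, h = box
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, width), min(y + h, height)
    return [
        [1 if (x0 <= c < x1 and y0 <= r < y1) else 0 for c in range(width)]
        for r in range(height)
    ]


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two equally sized binary masks."""
    inter = sum(p & q for ra, rb in zip(a, b) for p, q in zip(ra, rb))
    union = sum(p | q for ra, rb in zip(a, b) for p, q in zip(ra, rb))
    return inter / union if union else 0.0


# A diagonal object in a 4x4 image: its tight box covers the whole image.
diagonal = [[1 if r == c else 0 for c in range(4)] for r in range(4)]
print(mask_iou(box_to_mask((0, 0, 4, 4), 4, 4), diagonal))  # 0.25
```

The box still contains the target in every frame, which is why robustness can remain high, but most of the rectangle is background, which is the gap a refinement module is meant to close.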

- Which datasets can I use for training?

Validation and test splits of popular tracking datasets are NOT allowed for training the model. These include: OTB, VOT, ALOV, UAV123, NUSPRO, TempleColor, AVisT, LaSOT-val, GOT10k-val, GOT10k-test, TrackingNet-val/test, TOTB. Apart from these, training splits of any dataset are allowed (including LaSOT-train, TrackingNet-train, YouTubeVOS, COCO, etc.). To cover transparent objects, the Trans2k dataset may also be used.

- Which performance measures are you using?

New performance measures were developed for the VOTS challenges; here is a draft.

- When will my results be publicly available?

The results for a registered tracker are immediately revealed to the participant by email. These results will not be publicly disclosed until after the VOTS2023 workshop. At that point, a public VOTS2023 challenge leaderboard will appear on the VOTS webpage.

More questions?

Questions regarding the VOTS2023 challenge should be directed to the VOTS2023 committee. If you have general technical questions regarding the VOT toolkit, consult the FAQ page and the VOT support forum first.

Single Phase

Start: March 30, 2023, midnight

Description: Result submission

Competition Ends

May 14, 2026, midnight

| # | Username | Score |
|---|---|---|
| 1 | VOTS2025_S3-DAM4SAM | 0.75 |
| 2 | VOTS2024_S3_Track | 0.72 |
| 3 | VOTS2025_DAM4SAM | 0.71 |