VOTS-RT Benchmark

Organized by tktung - Current server time: Jan. 27, 2026, 6:56 a.m. UTC

Previous phase: Single Phase, July 14, 2025, midnight UTC

Current phase: Single Phase, July 14, 2025, midnight UTC

End: Competition Ends, June 23, 2025, 4:59 p.m. UTC


The Visual Object Tracking and Segmentation challenge VOTS2025 was a continuation of the VOTS2023 and VOTS2024 challenges, which no longer distinguish between single- and multi-target tracking or between short- and long-term tracking. It requires tracking one or more targets simultaneously by segmentation over long or short sequences, while the targets may disappear during tracking and reappear later in the video.

Three challenges were organized:

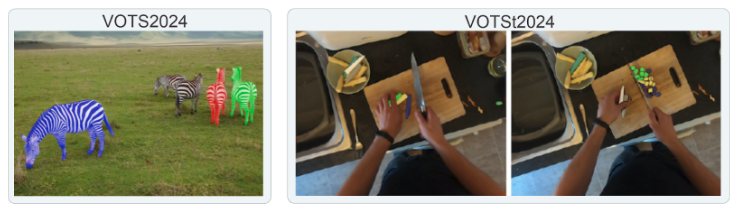

- VOTS2025 challenge - Continuation of the VOTS2023 and VOTS2024 challenges. The task is to track one or more general targets over short-term or long-term sequences by segmentation.

- VOTS2025-RT challenge and its continuation, the VOTS-RT Benchmark (this page) - The real-time version of the main VOTS2025 challenge. The task is to track one or more general targets over short-term or long-term sequences by segmentation in a real-time setup, i.e. frames are given to the tracker at a constant frame rate, regardless of the tracker latency.

- VOTSt2025 challenge - Continuation of the VOTSt2024 challenge, which considers general objects undergoing a topological transformation, such as vegetables cut into pieces, machines disassembled, etc.

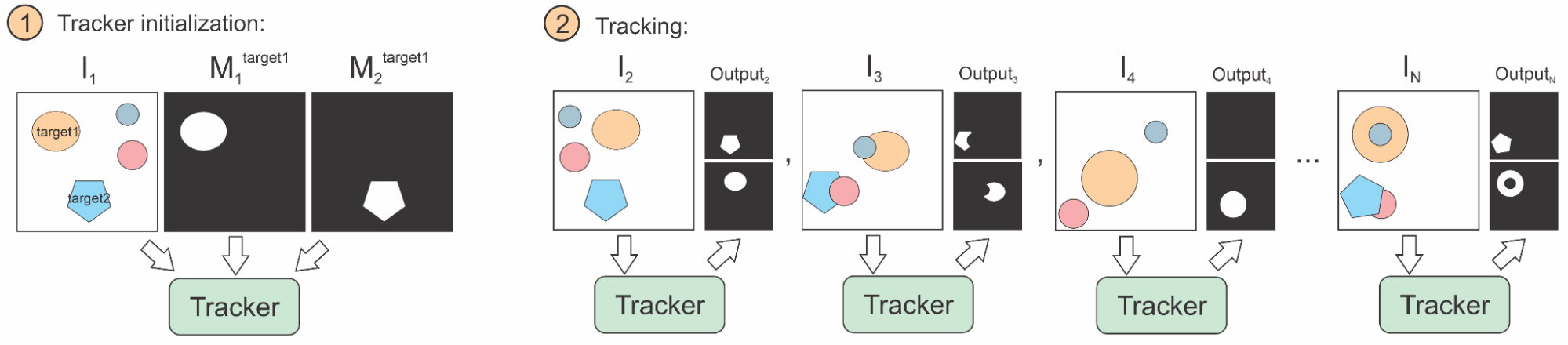

Problem statement

VOTS adopts a general problem formulation that covers single/multiple-target and short/long-term tracking as special cases. The tracker is initialized in the first frame by segmentation masks for all tracked targets. In each subsequent frame, the tracker has to report all segmentation masks (one for each target). The following figure summarizes the tracking task.

Researchers are invited to participate in two challenges: VOTS2025 and VOTSt2025. The difference between the two challenges is that VOTSt2025 considers objects undergoing a topological transformation, such as vegetables cut into pieces or machines being disassembled.

Sponsors

The VOTS2025 challenge is sponsored by the Faculty of Computer and Information Science, University of Ljubljana, The Academic and Research Network of Slovenia - ARNES, University of Birmingham, and Wallenberg AI - Autonomous Systems and Software Program WASP.

VOTS-RT Benchmark participation

- The VOTS-RT is a real-time benchmark run on the same dataset as the main VOTS2025 challenge. The difference from the main challenge is that the toolkit gives the frames to the tracker at constant time intervals (20 fps) to simulate the real-time constraint.

- Run your tracker by creating a workspace using the command `vot initialize vots2025/realtime` in the toolkit and submit the output masks to the evaluation server. Note that if you attend the real-time challenge, the baseline experiment (without any time constraint) will be performed automatically as well. Important: the real-time challenge supports only simultaneous tracker outputs, i.e., a tracker should report positions (masks) for all objects in an individual frame before the next frame is obtained.

- Register your tracker on the VOTS-RT Benchmark registration page, fill out the tracker description questionnaire, and submit the tracker description documents: a short description for the results paper and a longer description.

- Once registered, submit the output produced by the toolkit (see tutorial) to the VOTS-RT Benchmark evaluation server. Do not forget to pack the results with the `vot pack` command.

- Note: For additional analysis insight, the toolkit will also run the tracker automatically in the VOTS Benchmark (i.e., without the real-time constraint) to measure the performance drop due to the real-time constraint.
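To make the real-time constraint concrete, the following Python sketch simulates frame delivery at 20 fps and lists which frames a tracker with a given per-frame latency would get to process. It is an illustration only: the function name `processed_frames`, the millisecond bookkeeping, and in particular the policy that frames arriving while the tracker is busy are skipped are assumptions, not a description of how the VOT toolkit is implemented.

```python
FRAME_INTERVAL_MS = 50  # 20 fps, the rate stated above


def processed_frames(num_frames, latency_ms, interval_ms=FRAME_INTERVAL_MS):
    """Indices of the frames a tracker with fixed latency gets to process.

    Assumed policy (hypothetical, not taken from the toolkit): frame i
    arrives at time i * interval_ms; once the tracker finishes a frame it
    continues with the newest frame that has already arrived, or waits for
    the next one if none is pending.
    """
    processed = []
    clock = 0  # current simulation time in milliseconds
    frame = 0  # index of the frame the tracker handles next
    while frame < num_frames:
        clock = max(clock, frame * interval_ms)  # wait until the frame arrives
        processed.append(frame)
        clock += latency_ms  # the tracker is busy for latency_ms
        newest_arrived = clock // interval_ms
        frame = max(frame + 1, newest_arrived)
    return processed


if __name__ == "__main__":
    print(processed_frames(10, latency_ms=40))   # fast enough: every frame
    print(processed_frames(10, latency_ms=120))  # too slow: frames are skipped
```

Under these assumptions, a tracker that needs 120 ms per frame processes only about half of the frames, which is the kind of performance drop the comparison against the unconstrained baseline is meant to expose.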

Local hardware specifications

- NVIDIA RTX 4500 Ada GPU with 24GB of VRAM

- Intel i9-13900K CPU and 128GB of RAM

- OS: Debian 6.1.119

- CUDA 12.4.0

- The source code should be prepared for integration with the virtualenv Python package management system

Result submission

Follow this and this for how to create your submission. Do not forget to pack the results with the vot pack command.

Make sure that the tracker identifier in the manifest.yml (by default located inside the output zip file) matches the tracker short name you registered through our Google Form.

Then submit your zip file in the Participate tab. Note that uploading the zip file can take a long time, as the file may be large, and some private networks (e.g., company Wi-Fi) may not allow uploading files to the challenge page.

For each submission, the evaluation runs for roughly 45 minutes to 1 hour and 30 minutes; it can sometimes take even longer, depending on server load. To avoid bottlenecking the server, try to submit early, especially when the deadline is close.
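The identifier check described above can be automated before uploading. The sketch below is a minimal example using only the Python standard library; the function name `manifest_has_identifier` is hypothetical, and because the layout of manifest.yml is not described on this page, the code does not assume any key name. It simply scans `key: value` lines for a value equal to the registered short name rather than parsing YAML.

```python
import zipfile


def manifest_has_identifier(zip_path, short_name):
    """Return True if a manifest.yml in the archive has a `key: value`
    line whose value equals short_name.

    The key name is deliberately not assumed, because the manifest layout
    is not described here; this is a plain text scan, not a YAML parser.
    """
    with zipfile.ZipFile(zip_path) as archive:
        manifests = [name for name in archive.namelist()
                     if name.rsplit("/", 1)[-1] == "manifest.yml"]
        for name in manifests:
            text = archive.read(name).decode("utf-8")
            for line in text.splitlines():
                _, sep, value = line.partition(":")
                # Compare whole values so that a short name that is only a
                # prefix of another tracker's name does not match.
                if sep and value.strip().strip("\"'") == short_name:
                    return True
    return False
```

A call such as `manifest_has_identifier("results.zip", "MyTracker")` (both arguments are placeholders) returns `False` when the archive has no manifest.yml or no matching value, which is a cue to inspect the file by hand.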

Additional clarifications

- The short tracker description should contain a concise description (LaTeX format) for the VOT results paper appendix (see examples in the appendix of a VOT results paper). The longer description will be used by the VOTS TC for result interpretation. Write the descriptions in advance to speed up the submission process.

- Results for a single registered tracker may be submitted to the evaluation server at most 10 times, each at least 24h apart, to mitigate overfitting attempts. If more than 10 submissions are made, an email with the subject “Maximum number of VOTS submissions reached” will be sent to avoid confusion about the situation. Registering a slightly modified tracker to increase the number of server evaluations is prohibited. The VOTS committee reserves the discretion to disqualify trackers that violate this constraint. If in doubt whether a modification is “slight”, contact the VOTS committee.

- Submissions that result in an evaluation error do not count toward the submission limit.

- The results of the last submission will be taken into account and previous ones deleted. Make sure that the tracker code you link reproduces the last submission results.

- When coauthoring multiple submissions with a similar design, the longer description should refer to the other submissions and clearly state the differences. If in doubt whether a change is sufficient, contact the organisers.

- The participant can update information about the tracker (name, description, etc.) anytime before the challenge closes.

- Only a single eu.aihub.ml account is allowed per tracker.

- Authors are encouraged to submit their own previously published or unpublished trackers.

- Authors may submit modified versions of third-party trackers. The submission description should clearly state what the changes were. Third-party trackers submitted without significant modification will not be accepted.

- The VOTS2024 challenge winner is required to publicly release the pretrained tracker and the source code. In case private training sets are used, the authors are strongly encouraged to make the dataset publicly available to foster results reproducibility.
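The submission limits listed above (at most 10 submissions per tracker, at least 24h apart, with failed evaluations not counted) can be expressed as a small check. This is a sketch for planning submissions, not the server's logic: the function name `can_submit` and the `(timestamp, succeeded)` history format are hypothetical, and it assumes that a submission ending in an evaluation error also does not restart the 24h waiting period, which the rules do not state.

```python
from datetime import datetime, timedelta

MAX_SUBMISSIONS = 10            # limit stated in the rules above
MIN_GAP = timedelta(hours=24)   # minimum spacing stated in the rules above


def can_submit(history, now):
    """Decide whether another submission fits within the stated limits.

    history: list of (timestamp, succeeded) pairs for one registered tracker.
    Assumption: submissions that ended in an evaluation error are ignored
    both for the count and for the 24h spacing.
    """
    counted = [stamp for stamp, succeeded in history if succeeded]
    if len(counted) >= MAX_SUBMISSIONS:
        return False
    if counted and now - max(counted) < MIN_GAP:
        return False
    return True


if __name__ == "__main__":
    start = datetime(2025, 7, 14)
    history = [(start, True), (start + timedelta(hours=30), False)]
    print(can_submit(history, start + timedelta(hours=31)))
```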

Tracker registration checklist (prepare in advance)

- Make sure you selected the correct link depending on whether you’re submitting to the VOTS2025, VOTS2025-RT or the VOTSt2025 challenge.

- Authors, affiliations + emails, and division of work.

- Make sure that the tracker identifier in the manifest.yml (inside the results output zip file) matches the tracker short name you registered (in the registration form).

- Short tracker description for the results paper appendix. See examples in the VOT2022 results paper. (~800 characters with spaces when compiled, which is ~1500 characters of LaTeX text without bibtex file)

- Long tracker description (should detail the main ideas).

- Bibtex file for the long and short tracker description.

- A link to the tracker code placed in a persistent repository (GitHub, Dropbox, Google Drive,…). If the link is not yet publicly accessible, provide a password. Note that to become a co-author of the results paper, the tracker has to be publicly accessible by the VOTS2024 workshop date.

FAQ

-

Can I participate with a single-target tracker?

Sure. In fact, in past years most of the top performers were single-target trackers. This year we even simplified integration for single-target trackers by introducing the sequential mode. An example showing this case is available here. Note: sequential mode does not support the real-time experiment, so you will be able to participate in the main VOTS2025 Challenge only.

-

Does the number of targets change during tracking?

All targets in the sequence are specified in the first frame. During tracking, some targets may disappear and reappear later. The number of targets is different from sequence to sequence.

-

Can I participate with a bounding box tracker?

Sure, with a slight extension. In previous VOT challenges we showed that box trackers achieve very good performance on segmentation tasks by running a general segmentation method on top of a bounding box. So you can simply run AlphaRef (or a similar box refinement module like SAM) on top of your estimated bounding box to create the per-target segmentation mask. Running a vanilla bounding box tracker is possible, but its accuracy will be low (robustness might still be high).

-

Which datasets can I use for training?

Validation and test splits of popular tracking datasets are NOT allowed for training the model. These include: OTB, VOT, ALOV, UAV123, NUSPRO, TempleColor, AVisT, LaSOT-val, GOT10k-val, GOT10k-test, TrackingNet-val/test, TOTB. Apart from these, training splits of any dataset are allowed (including LaSOT-train, TrackingNet-train, YouTubeVOS, COCO, etc.). To cover transparent objects, the Trans2k dataset may be used. In case private training sets are used, we strongly encourage making them publicly available for results reproduction.

-

Which performance measures are you using?

The VOTS2023 performance measures are used in both VOTS2025 and VOTSt2025 challenges, see the VOTS2023 results paper.

-

When will my results be publicly available?

The results for a registered tracker are sent to the participant by email approximately 30 minutes after submission. In response to many requests, we decided to also reveal all results in the week after the challenge closes. The leaderboard data will also contain tracker registration details (without participants' personal details, the long tracker description, and the source code password). Note that a public link to the source code is mandatory for results paper coauthorship, but it can be kept under a password (revealed only to the VOTS committee) until the VOTS workshop.

-

Why is the analysis computed with the toolkit empty?

The VOTS2025 and VOTSt2025 evaluation datasets contain annotations for the initialization frame only, which means that the analysis cannot be computed locally by the toolkit. Thus, the results should be submitted to the server, where the analysis is computed and then reported to the user via email.

-

If I submit several times to the evaluation server, which submission will be used for the final score?

The final submission will be used for the final score. Please make sure that the tracker description matches the code that produced the final submission.

-

Will the evaluation server remain open after the VOTS2025 deadline?

After the challenge deadline, the VOTS2025 and VOTSt2025 challenges become the VOTS2025 and VOTSt2025 benchmarks and the evaluation server will remain open. In fact, the VOTS2023 and VOTS2024 challenge results will be added to the VOTS2025 results. The results submission link on the challenge page will change to enable post-challenge submissions not included in the VOTS2025 results paper. However, all benchmark and challenge submissions will appear on the same leaderboard.
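As a side note to the bounding-box question above, the following Python sketch illustrates why reporting a plain box as the mask costs accuracy. It compares a disk-shaped target with its tight bounding box using intersection-over-union. Everything here is an assumption made for illustration: the helper names, the synthetic disk target, and the use of plain IoU as a stand-in for overlap. The actual VOTS performance measures are defined in the VOTS2023 results paper and are not reproduced here.

```python
def disk_mask(height, width, cy, cx, radius):
    """Binary mask (nested lists of bool) of a filled disk."""
    return [[(y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2
             for x in range(width)] for y in range(height)]


def box_mask(height, width, x0, y0, x1, y1):
    """Binary mask of an axis-aligned box with inclusive corners."""
    return [[x0 <= x <= x1 and y0 <= y <= y1
             for x in range(width)] for y in range(height)]


def iou(mask_a, mask_b):
    """Intersection-over-union of two equally sized binary masks."""
    pairs = [(a, b) for row_a, row_b in zip(mask_a, mask_b)
             for a, b in zip(row_a, row_b)]
    intersection = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return intersection / union if union else 1.0


if __name__ == "__main__":
    target = disk_mask(41, 41, cy=20, cx=20, radius=10)
    tight_box = box_mask(41, 41, x0=10, y0=10, x1=30, y1=30)
    # Even a perfectly placed box overlaps the round target only partially.
    print(round(iou(target, tight_box), 3))
```

Even with a perfectly localized box, the overlap stays well below 1 because the box corners cover background, which is the gap a refinement module is meant to close.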

More questions?

Questions regarding the VOTS-RT Benchmark should be directed to the VOTS committee. If you have general technical questions regarding the VOT toolkit, consult the FAQ page and the VOT support forum first. Stay tuned with the latest VOT updates: Follow us on Twitter.



| # | Username | Score |
|---|---|---|
| 1 | VOTS2025-RT_DQASOTr | 0.67 |
| 2 | VOTS2025-RT_SV-DAM4SAM | 0.71 |
| 3 | VOTS2025-RT_DAM4SAMLight | 0.61 |